Developers hate waiting. Broken builds at 2 a.m. break spirits, and sprint velocity. Today we fix that with QA Copilot CI, a drop-in CLI that fires off AI testing automation on every build and hands you green lights faster than your coffee can cool.

Why This Launch Matters

- Time is money – long pipelines burn both.

- Flaky selectors turn night shifts into whack-a-mole.

- Audit trails are mandatory for modern teams.

What Ships in v1 ✅

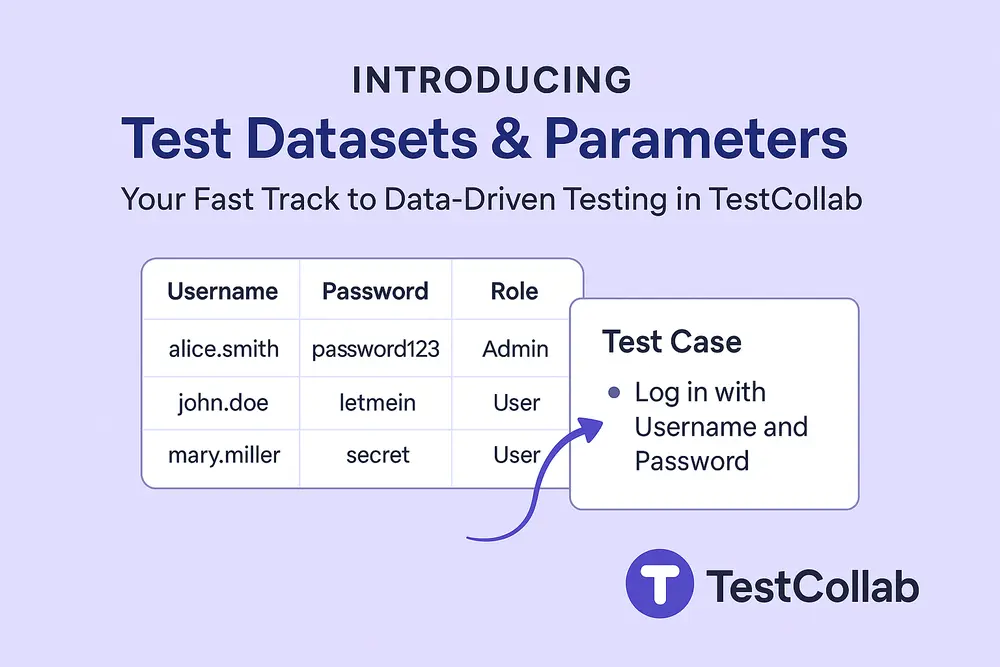

- Plug-and-play hooks – GitHub, GitLab, and Jenkins ready now.

- Auto-healing locators – DOM changes ≠ broken build.

- Smart retries – rerun just the flaky step, not the suite.

- Full-run video & logs – show reviewers, don’t just tell them.

- AI assertions – UI, images, and API payloads covered.

- Secrets vault & artifact TTL – zero leaks, tidy storage.

30-Second Setup

This assumes QA Copilot is already enabled and trained on your web project.

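```bash
npm install --save-dev qa-copilot-ci
```

```yaml
# .github/workflows/qa.yml (excerpt: one step inside your job's steps list)
# Assumes an earlier step with `id: deploy` exposes an `app_url` output,
# and that the job has already run `npm ci` so the local qac binary exists.
- name: Run QA Copilot
  run: |
    npx qac --build ${{ github.run_id }} \
      --app_url ${{ steps.deploy.outputs.app_url }} \
      --tc_project_id ${{ vars.TC_PROJECT_ID }} \
      --api_key ${{ secrets.TESTCOLLAB_API_KEY }}
```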

Push. Watch AI testing automation kick off in the Checks tab. Green bar? Merge. Red bar? Open the video, spot the issue, and fix it fast.

Metrics to Watch

- Median pipeline time – track the 50th-percentile runtime of your test stage; beta teams saw 35–50% drops in week one.

- Flaky failure rate – percentage of CI runs that fail for non-code reasons; target under 2%.

- Mean time to repair (MTTR) – average minutes from a red build to the next green one; expect steep declines thanks to instant video/log evidence.

- Evidence completeness – proportion of failed runs with video + log artifacts attached; should be 100%.

- Developer sentiment – quick retro poll (“How painful were tests this sprint?”); aim for an upward trend as AI testing automation takes hold.
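The four numeric metrics above are straightforward to compute from raw run history. The Python below is a minimal sketch. It assumes you can export, for each CI run, its finish time, test-stage duration, pass/fail status, a flaky-vs-code failure label, and whether video and log artifacts were attached. The `CiRun` record, its field names, and the helper functions are hypothetical names for illustration, not part of QA Copilot CI. The sample runs are invented data, not beta results.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median


@dataclass
class CiRun:
    """One CI run (hypothetical record shape; map it to your CI export)."""
    finished_at: datetime        # when the run completed
    test_stage_seconds: float    # runtime of the test stage only
    passed: bool                 # green (True) or red (False)
    flaky: bool = False          # hypothetical label: failed for non-code reasons
    has_video: bool = False      # video artifact attached
    has_log: bool = False        # log artifact attached


def median_pipeline_seconds(runs):
    """50th-percentile test-stage runtime (raises StatisticsError if empty)."""
    return median(r.test_stage_seconds for r in runs)


def flaky_failure_rate(runs):
    """Percentage of all runs that failed for non-code reasons."""
    if not runs:
        return 0.0
    return 100.0 * sum(1 for r in runs if not r.passed and r.flaky) / len(runs)


def mttr_minutes(runs):
    """Average minutes from the first red run of a streak to the next green run.

    A red streak still open at the end of the history is ignored.
    """
    repairs = []
    red_since = None
    for r in sorted(runs, key=lambda run: run.finished_at):
        if not r.passed and red_since is None:
            red_since = r.finished_at          # a red streak starts
        elif r.passed and red_since is not None:
            repairs.append((r.finished_at - red_since).total_seconds() / 60)
            red_since = None                   # the build is green again
    return mean(repairs) if repairs else 0.0


def evidence_completeness(runs):
    """Percentage of failed runs with both video and log attached."""
    failed = [r for r in runs if not r.passed]
    if not failed:
        return 100.0
    complete = sum(1 for r in failed if r.has_video and r.has_log)
    return 100.0 * complete / len(failed)


# Invented sample history, for illustration only.
t0 = datetime(2024, 1, 1, 9, 0)
runs = [
    CiRun(t0, 300, True),
    CiRun(t0 + timedelta(minutes=30), 280, False, flaky=True, has_video=True, has_log=True),
    CiRun(t0 + timedelta(minutes=50), 260, True),
    CiRun(t0 + timedelta(minutes=90), 320, False, has_video=True),
    CiRun(t0 + timedelta(minutes=130), 240, True),
]

print(median_pipeline_seconds(runs))   # 280
print(flaky_failure_rate(runs))        # 20.0
print(mttr_minutes(runs))              # 30.0
print(evidence_completeness(runs))     # 50.0
```

This sketch measures MTTR from the first red run in a streak to the next green run, so back-to-back failures count as one repair. If your team measures repair time per failure instead, adjust `mttr_minutes` accordingly.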

What’s Next — You Tell Us 🚧

We’re starting with a blank scoreboard: nothing on the roadmap is ranked yet. Jump into our private Slack, vote, and shape the order.

Get Involved: Join Our Private Slack

Want to steer the roadmap? We run all polls in #qa-copilot-roadmap on Slack.

Real pain beats “cool” every time—let us know what hurts most!

Ready to Ship with AI Testing Automation?

Sign up now → https://testcollab.com/qa-copilot (14-day free trial, no credit card required).

See how quickly Test Collab QA Copilot turns red builds green.