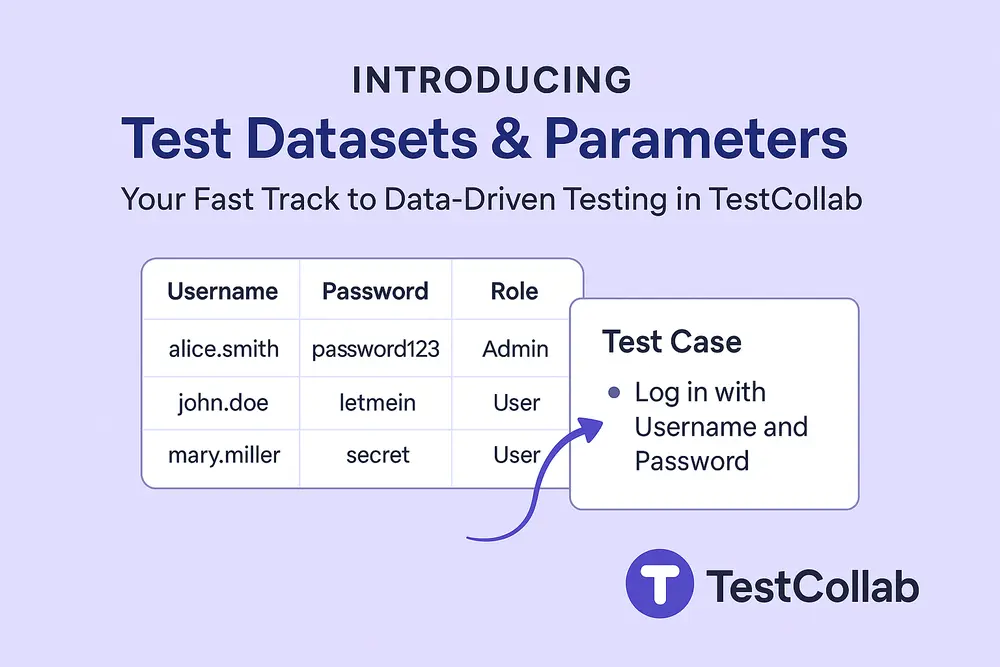

When you’re shipping features at sprint speed, test management grinds to a halt if you’re copying the same test case a dozen times just to cover new usernames or environment settings. Today we’re excited to roll out Test Datasets & Parameters, a pair of power-ups that let you keep logic and data separate, slash duplication, and turn any manual or automated script into a true data-driven testing machine.

Why we built it

A login flow that accepts three roles and two password patterns already needs six separate executions. Add language, browser, or region and that number skyrockets. Most teams handle this with extra spreadsheets or cloned cases—until maintenance becomes its own full-time job.

Datasets & Parameters solve the problem by letting you design one canonical test and feed it clean, table-driven test data on demand. No more hard-coded strings, no more version drift.
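To see how quickly the execution count described above grows, here is a small Python sketch. The role names, password patterns, and browser list are illustrative assumptions, not values from a real project.

```python
from itertools import product

# Illustrative values only; swap in whatever your login flow accepts.
roles = ["admin", "tester", "viewer"]
password_patterns = ["P@ssw0rd", "Pa55word!"]
browsers = ["chrome", "firefox", "safari"]

# Three roles x two password patterns = six executions.
login_combinations = list(product(roles, password_patterns))
print(len(login_combinations))  # 6

# Adding one more dimension multiplies the count again.
with_browsers = list(product(roles, password_patterns, browsers))
print(len(with_browsers))  # 18
```

Each extra dimension multiplies the total, which is why cloning a case per combination stops scaling so fast.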

Key concepts at a glance

| Term | What it means | Where you’ll see it |
| --- | --- | --- |
| Parameter | A named placeholder wrapped in {{braces}} inside your steps, e.g. {{username}}. At run time TestCollab swaps it for real data. | Step editor & dataset tables |
| Test Dataset | A table that lists Parameters as columns and holds one or more rows of values. Each row drives one iteration of the test. | Project → Settings → Test Datasets |
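If it helps to picture the swap, here is a minimal Python sketch of placeholder substitution. It is not TestCollab’s implementation: the `render_step` helper and the regular expression are assumptions made purely to illustrate how one dataset row could fill the {{braces}} in a step.

```python
import re

# Matches placeholders such as {{username}}. The exact syntax rules TestCollab
# applies are not documented here, so this pattern is an assumption.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

def render_step(step: str, row: dict[str, str]) -> str:
    """Replace each {{name}} in a step with the value from one dataset row."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in row:
            raise KeyError(f"dataset row has no value for parameter '{name}'")
        return row[name]
    return PLACEHOLDER.sub(substitute, step)

step = "Log in as {{username}} with password {{password}}"
row = {"username": "user1", "password": "P@ssw0rd"}
print(render_step(step, row))
# Log in as user1 with password P@ssw0rd
```

Raising an error on a missing column is a design choice in this sketch; it surfaces a mismatch between steps and dataset instead of silently leaving a placeholder in place.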

Why this matters:

- Design once, reuse everywhere—no copy-paste.

- Track pass/fail per data row for instant insight.

- Update live data without touching the test logic.

Building your first dataset (it’s two clicks)

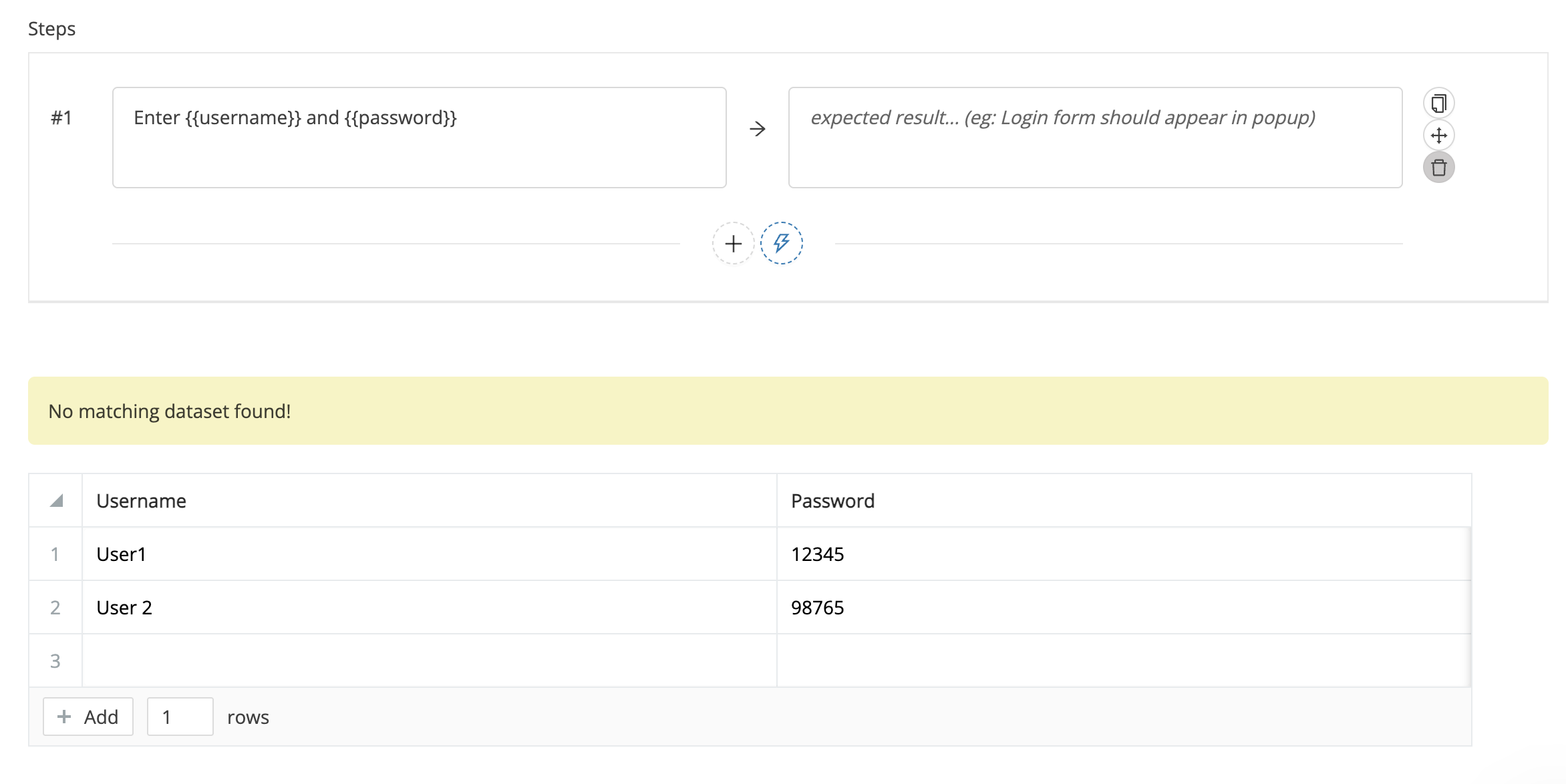

Tip: You can also create a dataset on the fly while editing a test case; just type {{parameter}} in any step and TestCollab spawns the column automatically.
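Conceptually, the on-the-fly behavior in the tip amounts to scanning your steps for placeholders and turning each unique name into a column. The Python sketch below illustrates that idea; the `dataset_columns` helper and the sample steps are hypothetical, not TestCollab’s own code.

```python
import re

# Assumed placeholder syntax: a word wrapped in double braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

def dataset_columns(steps: list[str]) -> list[str]:
    """Return unique parameter names in order of first appearance."""
    columns: list[str] = []
    for step in steps:
        for name in PLACEHOLDER.findall(step):
            if name not in columns:
                columns.append(name)
    return columns

steps = [
    "Open the login page",
    "Enter {{username}} and {{password}}",
    "Verify the dashboard shows the {{role}} menu for {{username}}",
]
print(dataset_columns(steps))  # ['username', 'password', 'role']
```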

Linking data to a test case

Open (or create) the test and replace hard-coded strings with parameters, for example {{username}} in place of a literal login name.

Below the editor, choose Select to reuse an existing dataset or Use as template to clone and tweak one. Edits you make here sync straight back to the project-level table—no duplicate copies to track.

Running the suite

Add the data-driven case to any Test Plan. At execution time every row in the linked dataset becomes its own iteration; testers see real values substituted inline, can pass/fail each row, attach evidence, and leave comments. Results are stored per iteration, so you know exactly which credential pair or config broke the build.
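The row-per-iteration model can be sketched in a few lines of Python. Everything here is an assumption for illustration: the `IterationResult` record, the `expand_iterations` helper, and the hard-coded verdicts stand in for whatever a tester records during a real run.

```python
from dataclasses import dataclass

@dataclass
class IterationResult:
    row_number: int
    values: dict[str, str]
    status: str          # "passed" or "failed"
    comment: str = ""

def expand_iterations(rows: list[dict[str, str]]) -> list[tuple[int, dict[str, str]]]:
    """One iteration per dataset row, numbered from 1."""
    return list(enumerate(rows, start=1))

rows = [
    {"username": "user1", "password": "P@ssw0rd", "role": "admin"},
    {"username": "user2", "password": "P@ssw0rd", "role": "tester"},
]

# A tester's verdicts, hard-coded here purely for illustration.
verdicts = {1: ("passed", ""), 2: ("failed", "dashboard did not load")}

results = [
    IterationResult(n, values, *verdicts[n])
    for n, values in expand_iterations(rows)
]
failed = [r for r in results if r.status == "failed"]
print([(r.row_number, r.values["username"]) for r in failed])  # [(2, 'user2')]
```

Because each result carries its own row values, a failure points straight at the data that caused it.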

Historical runs keep their original data even if the dataset later changes, giving you perfect audit trails while still letting current plans pull the latest rows.
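One way to picture this audit-trail behavior is a snapshot taken when a run starts. The Python sketch below assumes a hypothetical `start_run` helper that deep-copies the rows; how TestCollab actually stores historical data is not described here.

```python
import copy

dataset = [
    {"username": "user1", "password": "P@ssw0rd", "role": "admin"},
]

def start_run(rows):
    """Freeze a deep copy of the rows so later edits cannot alter this run."""
    return copy.deepcopy(rows)

historical_run = start_run(dataset)

# The dataset changes after the run was recorded.
dataset[0]["password"] = "Pa55word!"
dataset.append({"username": "user3", "password": "P@ssw0rd", "role": "viewer"})

current_run = start_run(dataset)

print(historical_run[0]["password"])          # P@ssw0rd
print(len(historical_run), len(current_run))  # 1 2
```

A deep copy matters here: a shallow copy would share the row dictionaries, so editing a password later would quietly rewrite history.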

A lightning-quick example

| Username | Password | Role |
| --- | --- | --- |
| user1 | P@ssw0rd | admin |
| user2 | P@ssw0rd | tester |
| user1 | Pa55word! | tester |
| user2 | Pa55word! | admin |

One dataset, one login test case. Four iterations automatically appear in your run—zero cloning, zero spreadsheets. Need to add a “viewer” role? Drop a new row into the table and you’re covered next run.

Getting started in under five minutes

Full how-to docs:

- Quick-start overview

- Creating & managing datasets

- Linking datasets to a case

- Running tests with datasets

Ready to try?

Head to any project, click Test Datasets, and start feeding your tests clean, reusable data. Your team—and your future self—will thank you. 🎉