We’ve been upgrading our release pipeline here at Test Collab. Upgrades like this are a good opportunity to find past mistakes and eliminate them altogether. Along the way, I thought it would be good to share what I’ve learned about continuous integration over the lifetime of Test Collab and before.

Continuous integration is a key element of your software delivery. If it isn’t planned carefully and correctly, you’ll most likely face a higher risk of bad releases and bugs, resulting in higher costs for your business.

CI is a big investment, but fortunately, mistakes in it are fairly easy to fix. So here are some of the things I’ve learned about CI/CD pipelines over the past few years. Feel free to agree, disagree, or share your comments below (the list is in no particular order):

1. Tools are temporary, so don’t get tied to one tool

Avoid marrying a tool, because tools are temporary. Or at least, ideally speaking, your CI tool should be.

Why? It might not seem like much now, but you’ll be stuck with that old tool for years, because migrating to a new one is a huge job.

Storing long shell commands in Jenkins? You’re doing it wrong!

Instead, try:

Create small .sh or .bat files, commit them to your repo, and call them when needed. Use environment variables to store credentials.

This makes migrating to a new tool much easier. Not only that, but you can run these scripts anywhere to carry out small tests.
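Here is a minimal sketch of the same idea in Python, which runs unchanged on both Linux and Windows agents. The step lives in the repo, takes no tool-specific settings, and reads its credentials from environment variables. The variable names `ARTIFACT_BUCKET` and `DEPLOY_TOKEN` are hypothetical; use whatever your own step needs.

```python
"""A hypothetical pipeline step kept in the repo (e.g. ci/deploy_step.py).

Any CI tool can run it; credentials come from environment variables,
not from settings stored inside the CI tool.
"""
import os
import sys

# Hypothetical variable names; define them in whichever CI tool you use.
REQUIRED_VARS = ("ARTIFACT_BUCKET", "DEPLOY_TOKEN")


def load_config(env=None):
    """Return the required settings, or raise if any of them are missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


def main():
    """Entry point the CI job calls; returns a process exit code."""
    try:
        config = load_config()
    except RuntimeError as err:
        print(err, file=sys.stderr)
        return 1
    # Never print secrets; only report the non-sensitive target.
    print("deploying to", config["ARTIFACT_BUCKET"])
    return 0
```

Wire it up with a `sys.exit(main())` entry point, and the CI job shrinks to a single command such as `python ci/deploy_step.py`. Moving to another CI tool then only means re-declaring the environment variables.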

2. Save build artifacts to a remote location (disk space runs out really, really fast)

If you’re spending even a minute cleaning up disk space, you did something wrong.

See what Joel Spolsky wrote about disk space 17 years ago:

“At my last job, the system administrator kept sending me automated spam complaining that I was using more than … get this … 220 megabytes of hard drive space on the server. I pointed out that given the price of hard drives these days, the cost of this space was significantly less than the cost of the toilet paper I used. Spending even 10 minutes cleaning up my directory would be a fabulous waste of productivity.” (https://www.joelonsoftware.com/2000/08/09/the-joel-test-12-steps-to-better-code/)

Today, with platforms like AWS, you hardly ever have to worry about disk space. Your artifacts should go automatically to a cloud storage service or directly to your enterprise server instead of filling up your CI server.
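A minimal sketch of an "offload" step, assuming the remote storage is mounted on the CI agent as a directory (for example a network share). The `project/build_id` folder layout is a hypothetical convention. With S3 you would replace the copy with an SDK upload, such as boto3’s `upload_file`, but the order of operations stays the same: upload, verify, then free the local disk.

```python
import shutil
from pathlib import Path


def offload_artifact(local_file, remote_root, project, build_id):
    """Copy a finished artifact to remote storage, then delete the local copy."""
    src = Path(local_file)
    # Hypothetical layout: <remote_root>/<project>/<build_id>/<file name>
    dest_dir = Path(remote_root) / project / str(build_id)
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    shutil.copy2(src, dest)
    # Only free the CI disk once the remote copy is the same size as the source.
    if dest.stat().st_size != src.stat().st_size:
        raise OSError(f"copy of {src} looks incomplete")
    src.unlink()
    return dest
```

Running this as the last step of every build means nobody has to clean the CI server by hand.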

3. Package first, then run jobs

We made this mistake while building our first pipeline a few years ago. It was a four-stage pipeline, and packages were generated late, as late as the third stage. As a result, we couldn’t trigger automated jobs or manually test builds until a whole hour had passed.

So, lesson learned? Generate packages first.

The sooner the packages are ready, the sooner dependent jobs can run, in any order.

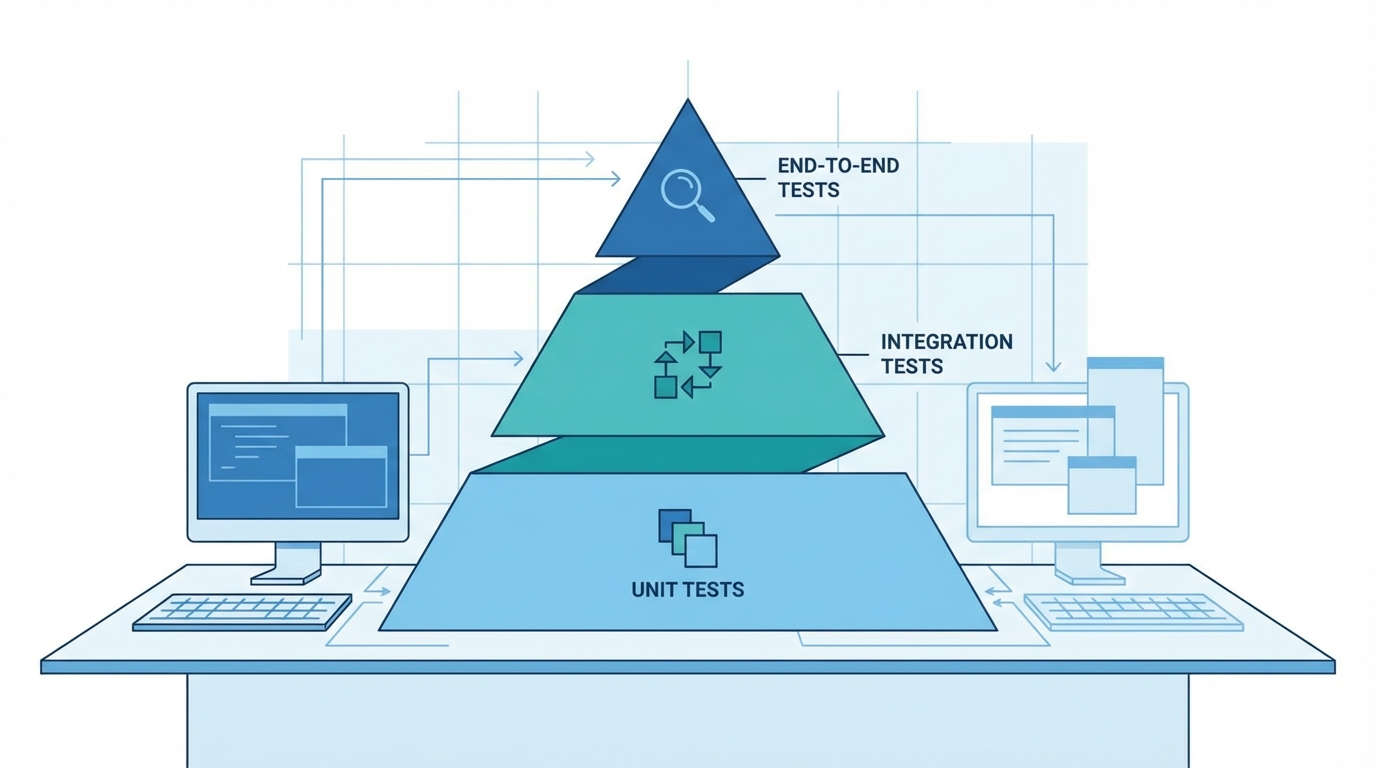

The exception here is unit testing, which should run before packaging. So the packaging job should:

run your unit tests -> package -> publish to private space
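The packaging job above can be sketched as an ordered list of steps that stops at the first failure, so a package is never published from a build whose unit tests failed. The commands here are hypothetical placeholders (they just print a message); swap in your project’s real test, package, and publish commands.

```python
import subprocess
import sys

# Hypothetical placeholder commands; replace with your project's real ones.
PACKAGING_JOB = [
    ("unit tests", [sys.executable, "-c", "print('tests passed')"]),
    ("package", [sys.executable, "-c", "print('package built')"]),
    ("publish", [sys.executable, "-c", "print('published to private space')"]),
]


def run_job(steps):
    """Run steps in order and stop at the first failing one.

    Returns (completed step names, name of the failed step or None).
    """
    completed = []
    for name, cmd in steps:
        result = subprocess.run(cmd)
        if result.returncode != 0:
            print(f"step '{name}' failed with exit code {result.returncode}")
            return completed, name
        completed.append(name)
    return completed, None
```

Once `run_job(PACKAGING_JOB)` succeeds, every downstream job can pick up the published package instead of rebuilding it.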

4. Use a separate VM or container for each job

Not doing so will cost you a lot of debugging hours. Your builds will fail randomly, and you’ll be left with unexpected, hidden bugs that take a Sherlock Holmes to solve.

What really happens if you don’t do it?

Previously run jobs alter a lot of things on the system: installed packages, log files, system settings, and whatnot. Probably more than you can keep track of.

So a new job can’t start from a clean slate unless it runs in a fresh VM or container. These leftover modifications conflict with future builds and produce unexpected issues or random failures.

In our case, this mistake may have been the most expensive one on this list. The good news is that many of today’s build tools enforce a separate container for each build from day one, and they’re really easy to set up too.
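If your CI tool doesn’t isolate jobs for you, a minimal sketch of doing it yourself with Docker looks like this: each job gets a brand-new container from a fixed image, and `--rm` deletes the container afterwards, so nothing one job changes can leak into the next. The image name, checkout path, and script path are hypothetical.

```python
import shlex


def container_command(image, workdir, script):
    """Build a `docker run` command that runs one job in a throwaway container."""
    return [
        "docker", "run",
        "--rm",                   # delete the container when the job ends
        "-v", f"{workdir}:/src",  # mount the checked-out code
        "-w", "/src",             # start the job inside the checkout
        image,
        "sh", "-c", script,
    ]


# Hypothetical image and paths; pass the list to subprocess.run() to execute it.
print(shlex.join(container_command("python:3.12-slim", "/ci/checkout", "./ci/run-tests.sh")))
```

Only the mounted checkout is shared with the host; installed packages, logs, and settings changed inside the container disappear with it.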

5. Parallel jobs are good, but implement them only when needed

When I first read Continuous Delivery (I’ve read it twice, actually; it’s a good book), I was suddenly overwhelmed by all the things we weren’t doing in our CI process. I immediately put more effort into improving things and added more jobs and more parallelization. Only, I didn’t properly gauge what the future hosting and maintenance costs would be, or why I was doing it at all.

The result? A lesson learned the hard way: servers can easily cost more than your apartment rent. Implement parallel jobs only where they make sense, that is, where they actually save time.

Not to mention, you have to maintain all these jobs for years to come.
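Before adding another agent, it helps to estimate how much wall-clock time it would actually save. This is a rough sketch under a simplifying assumption: stages are independent and are spread over agents greedily (longest stage first, onto the least-loaded agent). Real schedulers, queue times, and setup overhead will change the numbers.

```python
def parallel_savings(stage_minutes, agents):
    """Estimate wall-clock minutes saved by spreading independent stages over agents.

    Uses a greedy assignment: longest stage first, onto the least-loaded agent.
    """
    if agents < 1:
        raise ValueError("need at least one agent")
    loads = [0] * agents
    for minutes in sorted(stage_minutes, reverse=True):
        loads[loads.index(min(loads))] += minutes
    sequential = sum(stage_minutes)
    return sequential - max(loads)
```

For stages of 30, 5, and 5 minutes, a second agent saves 10 minutes, but a third saves nothing more, because the 30-minute stage still dominates. That third agent would be pure cost.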

6. CI/CD doesn’t mean no manual testing

Don’t think that even a world-class CI/CD pipeline will ever replace manual testing. It won’t. It may come close, but it won’t.

Instead of thinking about elimination, integrate manual testing with your pipeline. Here’s one method we use:

We use Test Collab (yes, our own product) for our manual testing. We’ve tagged the test cases that need to run at different stages of the pipeline.

Since most of our projects have fairly simple pipelines for now, the process is simple:

As soon as the staging setup job finishes, we call the Test Collab API to assign the test cases tagged ‘pre-launch’ to our testers. This tells our testers that they’re expected to run the ‘pre-launch’ test cases on a particular URL. When they finish testing, an email with the full report is sent to the manager (me, in this case). I manually press the “OK” button to trigger the “launch” job, which deploys everything, and then Test Collab is called once again to create a manual test execution request for the ‘post-launch’ tag.
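The flow above can be sketched as a small gated pipeline. `request_manual_tests` is a hypothetical placeholder, not Test Collab’s actual API; in a real pipeline it would make an HTTP call to your test management tool. The `approve` callback stands in for the manager pressing “OK” after reading the report.

```python
from dataclasses import dataclass, field


@dataclass
class Release:
    """Tracks one release through the staging -> launch flow."""
    log: list = field(default_factory=list)
    approved: bool = False


def request_manual_tests(release, tag):
    # Hypothetical placeholder for the call that assigns the test cases
    # carrying `tag` to testers in the test management tool.
    release.log.append(f"manual tests requested: {tag}")


def run_pipeline(release, approve):
    """Run the gated flow; `approve` models the manual "OK" before launch."""
    release.log.append("staging set up")
    request_manual_tests(release, "pre-launch")
    # Testers run the pre-launch cases and the report goes to the manager.
    release.approved = approve(release)
    if not release.approved:
        release.log.append("launch blocked")
        return release
    release.log.append("launched")
    request_manual_tests(release, "post-launch")
    return release
```

The key design choice is that the launch job cannot run until a human approves it, so manual testing is part of the pipeline rather than a separate activity.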

7. Unit tests are a big investment when your project is new

I suggest avoiding them entirely until your code stabilizes. Unit tests are brittle, and they can often be replaced by automated acceptance tests.

When your project is in its early phase, it may go through a lot of rewrites, new libraries, etc. In such cases, unit tests can be a huge overhead with little to no ROI.

Acceptance tests, on the other hand, are better candidates.

While building Load Xen, we followed test-driven development, and it didn’t really work for us, at least for some parts. The primary reason was that major parts of the code were being rewritten every week to address new problems.

It’s still debatable, but you’ve been warned!

8. Build all branches, not just trunk/master

Another hugely important point. I urge you to read this title once more, please. Thanks!

So what’s the problem if I just build master? Failures in every other branch stay hidden from you until you merge.

Ideally, we want to keep all branches close to a release-ready state. Without the pipeline, branches are full of surprises. On the other hand, if you’re building all branches, you can be confident about their quality.

This also saves time, as you’ll see builds go green smoothly, one after another, even after merging!
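Many CI tools can discover branches on their own (Jenkins multibranch pipelines, for example). If yours can’t, a minimal sketch is to list every branch on the remote with `git ls-remote --heads`, whose output lines have the form `<sha>\trefs/heads/<branch>`, and queue a build for each one. The `build` callback is a hypothetical hook into whatever triggers a build in your setup.

```python
def branches_from_ls_remote(output):
    """Parse `git ls-remote --heads <remote>` output into (branch, sha) pairs."""
    prefix = "refs/heads/"
    branches = []
    for line in output.splitlines():
        if not line.strip():
            continue
        sha, ref = line.split("\t", 1)
        if ref.startswith(prefix):
            branches.append((ref[len(prefix):], sha))
    return branches


def build_queue(branches, build):
    """Trigger a build for every branch, not just master."""
    return {name: build(name, sha) for name, sha in branches}
```

In practice, the input would come from `subprocess.run(["git", "ls-remote", "--heads", "origin"], capture_output=True, text=True).stdout`.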

Changes in branches also affect manual testing; see how branches and manual testing can be handled in Test Collab.

9. Pipelines should mature slowly, not in one go

Remember my mistake from #5? Chasing perfection can be good, but it can be harmful too. Don’t over-invest in your CI/CD pipeline. Solve current problems and leave theoretical problems for the future.

Here’s an excellent representation from the Shippable team:

I suggest maturing into the CI/CD model slowly rather than in one go. Assess where your project stands now and what problems you’re facing today, and then move up the model.

Moving up from one stage to the next will cost you, and things will go wrong. You need a good rationale behind each move.

One more thing: not all of your projects will need to move beyond a certain stage.

Liked this article? Please share it or comment with your views. I’d love to hear from you.