Artificial Intelligence (AI) and Machine Learning (ML) models are increasingly integrated into software products, bringing new challenges for Quality Assurance (QA) professionals. One emerging innovation is the Model Context Protocol (MCP) – a technology designed to connect AI models with external data seamlessly. This article explains MCP in accessible terms and outlines what QA teams need to know, how to test MCP-based systems, and how tools like Test Collab can support these efforts.

What is the Model Context Protocol (MCP)?

MCP is an open standard that bridges AI models (like large language models) with the data and tools they need. In traditional setups, connecting a model to each data source required custom code or APIs, creating many fragmented integrations. MCP addresses this by providing one universal protocol for all such connections. Think of MCP as the USB-C of AI integrations – a single, standardized “plug” that fits all data sources and tools. Instead of dozens of bespoke connectors, developers (and testers) deal with one consistent interface.

Key points about MCP:

- Open Standard: MCP is openly published and designed for broad adoption. Anthropic introduced it in late 2024 as a standardized way to connect AI assistants to the systems where data lives, and open-sourced the specification and SDKs to encourage a community-driven ecosystem around it.

- Two-Way Connection: It enables secure, two-way communication between AI applications and external data sources. An AI application can request information from an MCP server that fronts a database or service, and the server sends context or data back. This gives the model the context it needs and lets the application retrieve or update data as required.

- “Bring Your Own Data” Friendly: With MCP, organizations can hook up content repositories, business tools, development environments, and more to their AI models through a common protocol. AI systems are therefore no longer “trapped behind information silos”, because each new data source no longer needs its own custom integration.

- Consistent Context Management: MCP connections are stateful sessions, which helps an application maintain state and important information throughout a model’s operation. The aim is to keep context from being lost mid-process (a known issue that can degrade an AI’s output quality) and to keep the model’s output aligned with the provided context. In simpler terms, MCP is meant to help the AI remember and consistently use the relevant information, even as the interaction grows complex or lengthy.
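MCP messages use JSON-RPC 2.0. The following minimal sketch hand-builds the envelopes to show the shape of the two-way exchange when an application reads a resource; it is not the official MCP SDK. The `resources/read` method and the `contents` result field follow the MCP specification as we understand it, while the `kb://` URI, the fixture text, and the helper functions are hypothetical. The `-32002` not-found code is illustrative; check the specification revision you target.

```python
import json
from itertools import count

# Hypothetical fixture: one knowledge-base article exposed as an MCP "resource".
RESOURCES = {"kb://articles/refund-policy": "Refunds are accepted within 30 days."}

_next_id = count(1)


def make_request(method: str, params: dict) -> str:
    """Client side: wrap a call in a JSON-RPC 2.0 request envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_next_id),
                       "method": method, "params": params})


def _error(req_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Server side: answer resources/read from the fixture, echoing the request id."""
    req = json.loads(raw)
    if req.get("method") != "resources/read":
        return _error(req.get("id"), -32601, "Method not found")  # standard JSON-RPC code
    uri = req.get("params", {}).get("uri")
    if uri not in RESOURCES:
        # Illustrative not-found code; confirm against the MCP spec revision you use.
        return _error(req["id"], -32002, f"Resource not found: {uri}")
    contents = [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": {"contents": contents}})


request = make_request("resources/read", {"uri": "kb://articles/refund-policy"})
response = json.loads(handle(request))
print(response["result"]["contents"][0]["text"])  # Refunds are accepted within 30 days.
```

In a real deployment, the client and server run in separate processes and exchange these messages over a transport such as stdio or HTTP, and each session begins with an `initialize` handshake that this sketch omits.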

Why it matters: By standardizing how AI applications receive context, MCP makes AI integrations simpler and more reliable. For QA professionals, this means systems built on MCP are intended to behave more predictably when accessing external data. As adoption grows (pre-built MCP servers already exist for tools such as Google Drive, Slack, and GitHub), QA teams are increasingly likely to encounter MCP in AI-powered products. Understanding it helps QA anticipate how an AI model interacts with external data and what new testing considerations come with that.

MCP’s Role in AI/ML Development and Testing Workflows

From a development perspective, MCP is becoming part of the standard stack for building AI applications. Developers use MCP to supply models with up-to-date context from various sources without reinventing the wheel each time. This has several implications for QA and testing workflows:

- Standardized Interfaces: Because MCP replaces custom adapters with a standard protocol, QA testers can expect more uniform behavior across different integrations. In the past, if a model pulled data from, say, a database and a third-party API, each integration might have behaved differently. With MCP, the interactions follow one specification, making it easier to predict and test how the model fetches and uses data.

- Enhanced Relevance and Accuracy: Models using MCP can provide more relevant responses because they have timely access to the right data. For QA, this means that when you test an AI feature, you should validate that the model actually retrieves the correct context and that its responses improve with that context. MCP’s design goal is to help AI produce “better, more relevant responses” by breaking down data silos. QA needs to verify that this promise holds true: for example, does the customer support chatbot actually pull the latest knowledge base article when answering a query?

- Changes in Testing Scope: Testing an AI/ML model traditionally focuses on the model’s output for given inputs. With MCP in the mix, the testing scope expands to include the context retrieval process. QA professionals must test not just the AI’s answer, but also that the MCP connection provided the right context (and that the system handles cases where context is missing or incomplete). This is akin to testing an integration or API in addition to the AI logic.

- Faster Iteration, New Features: Since MCP makes it easier for developers to connect new data sources, features can be added quickly (for example, hooking the AI into a new internal system via MCP). QA teams should be prepared for a faster pace of changes in AI capabilities. The testing workflow might need to accommodate quick integration of new context sources – for instance, having test cases ready for when the AI is connected to a new CRM or knowledge base via MCP.
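To make the expanded testing scope concrete, here is a minimal sketch that checks the retrieval step separately from the answer. It assumes a hypothetical `answer_question` function standing in for the AI feature, which receives its context fetcher as a parameter; the `SpyContextSource` test double and the `kb://` URI are also illustrative and not part of MCP or any SDK.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SpyContextSource:
    """Test double for an MCP-backed context source that records every fetch."""
    data: dict
    fetched: list = field(default_factory=list)

    def fetch(self, uri: str) -> Optional[str]:
        self.fetched.append(uri)
        return self.data.get(uri)


def answer_question(question: str, fetch: Callable[[str], Optional[str]]) -> str:
    """Hypothetical stand-in for the AI feature under test.

    A real system would pass the retrieved context to a model; this stub only
    echoes it so the test can focus on the retrieval step.
    """
    context = fetch("kb://articles/latest")
    if context is None:
        return "Sorry, I could not find that information."
    return f"According to our knowledge base: {context}"


def check_context_retrieval() -> None:
    # Happy path: the expected resource is fetched and its content reaches the answer.
    source = SpyContextSource({"kb://articles/latest": "Version 2.3 adds SSO support."})
    answer = answer_question("What is new in 2.3?", source.fetch)
    assert source.fetched == ["kb://articles/latest"]
    assert "SSO support" in answer

    # Missing context: the feature should fall back instead of guessing.
    empty = SpyContextSource({})
    answer = answer_question("What is new in 2.3?", empty.fetch)
    assert "could not find" in answer


check_context_retrieval()
```

Against a real model, assertions on the answer text would usually be looser (key facts present, nothing contradicted), because the wording can vary from run to run; the assertion on which resource was fetched can stay strict.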

In summary, QA professionals should know that MCP is becoming a critical part of how AI systems are built. It aims to eliminate much of the flaky, inconsistent integration behavior by introducing a unified approach. When a product team says an AI feature uses MCP, testers should treat that as a cue to focus on context-related testing and integration validation, alongside traditional functional testing of the AI.

Testing MCP-Based Systems: Challenges and Strategies

Testing systems that use MCP introduces some unique challenges. Below we outline the key challenges QA teams might face, followed by methodologies and best practices to tackle them.

Key Challenges in Testing MCP-Integrated Systems

- Dynamic Context and Data Variability: Because MCP allows AI models to fetch live data from various sources, the content the model sees can change frequently. This makes it challenging to create static test cases. Testers need to ensure the model responds correctly across different contexts (for example, different user profiles, documents, or records). Reproducibility can be tricky if the external data changes – QA may need to use fixed test data or snapshot versions of data sources for consistent results.

- Multiple Integration Points: An MCP-based system might connect to several external systems (databases, third-party services, file repositories, etc.) through the protocol. Each connection is standardized, but QA must still verify each one. Ensuring the AI properly handles context from each integrated source is vital. This means testing scenarios for each data source in isolation and in combination – for instance, does the system respond correctly when only the database is available versus when both database and file storage contexts are provided?

- Interoperability and Consistency: MCP’s goal is to maintain context across different tools so the AI can move seamlessly between them. QA should validate that consistency. A challenge here is ensuring that context from one source doesn’t conflict with another and that the handover is smooth. If an AI agent uses context from a project management tool and a code repository, testers should verify the AI consistently understands the combined context. Inconsistencies might show up as the AI ignoring one source or mixing up information from different sources, and testing needs to catch them.

- Security and Access Control: With great data access comes great responsibility. MCP connections often involve sensitive data, so security is a central design concern: the specification emphasizes user consent and access control and defines an optional OAuth-based authorization flow for HTTP transports, while permission enforcement and audit logging are largely left to each implementation. QA teams must test that security rules are respected. This includes verifying that the AI cannot access data it lacks permission for, that requests over MCP are authenticated, and that context access is properly logged. For example, if an AI assistant is only supposed to fetch customer data relevant to the logged-in user, testers should attempt cross-user data access to ensure the system correctly denies it.

- Performance and Reliability: Adding external context fetching could impact performance. QA should watch for any slowdowns or timeouts when the AI requests data via MCP. There’s a challenge in ensuring the system remains reliable under load – e.g., if many users query the AI and it hits the MCP server heavily, does it still respond quickly and accurately? Also, testers should consider failure modes: if an MCP server (data source) goes down or returns an error, does the AI handle it gracefully (perhaps by responding with an apology or a fallback answer)? These scenarios need to be part of the test plan.
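The security and failure-mode checks above lend themselves to small automated tests. The sketch below assumes a hypothetical `FakeCustomerServer` standing in for an MCP server that holds per-customer records, and a hypothetical `assistant_reply` function standing in for the assistant's logic. Real deployments enforce access at the server or transport layer, so the simple ownership rule and the in-memory `audit_log` here are illustrative only.

```python
from dataclasses import dataclass, field


class AccessDenied(Exception):
    """Raised when a user requests records they are not entitled to see."""


@dataclass
class FakeCustomerServer:
    """Hypothetical stand-in for an MCP server exposing per-customer records."""
    records: dict                                     # customer_id -> order history
    down: bool = False                                # set True to simulate an outage
    audit_log: list = field(default_factory=list)     # (user, customer, decision)

    def read(self, requesting_user: str, customer_id: str) -> str:
        if self.down:
            raise ConnectionError("MCP server unavailable")
        allowed = requesting_user == customer_id      # illustrative ownership rule
        self.audit_log.append((requesting_user, customer_id, "allowed" if allowed else "denied"))
        if not allowed:
            raise AccessDenied(f"{requesting_user} may not read {customer_id}")
        return self.records[customer_id]


def assistant_reply(server: FakeCustomerServer, user: str, customer_id: str) -> str:
    """Hypothetical assistant logic: use context if allowed, degrade gracefully otherwise."""
    try:
        return f"Your recent orders: {server.read(user, customer_id)}"
    except AccessDenied:
        return "Sorry, I can't share another customer's information."
    except ConnectionError:
        return "Sorry, order history is temporarily unavailable. Please try again later."


server = FakeCustomerServer({"alice": "2 orders", "bob": "5 orders"})

# The logged-in user can see their own data.
assert "2 orders" in assistant_reply(server, "alice", "alice")

# Cross-user access is refused, leaks nothing, and is recorded in the audit log.
reply = assistant_reply(server, "alice", "bob")
assert "5 orders" not in reply
assert server.audit_log[-1] == ("alice", "bob", "denied")

# An outage produces a fallback answer instead of a crash.
server.down = True
assert "temporarily unavailable" in assistant_reply(server, "alice", "alice")
```

Keeping the fake server's switches (`down`, the record set) as plain fields makes it easy to add further failure modes, such as slow responses or malformed records, as separate test cases.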

Testing Methodologies and Best Practices

Approaching testing for MCP-based systems will require a mix of traditional integration testing techniques and some AI-specific considerations. Here are some methodologies and best practices for QA teams:

- Integration Testing for Context: Treat the MCP interface as a critical integration to test. Develop test cases that specifically exercise the context retrieval layer. For instance, use a known set of data on an MCP server (like a test database or a dummy knowledge base) and verify the AI’s responses. If the AI is asked a question that requires data from that source, confirm that it actually uses the MCP-fetched data in its answer. Essentially, validate the "wiring" between the AI and external data.

- Use of Test Stubs/Mock Servers: Where connecting to a real external system is impractical (due to cost, security, or variability), consider using a mock MCP server. Since MCP is standardized, you can set up a dummy server that implements the MCP specification but serves controlled data. QA can then point the AI application at this test server so that it consistently receives known context, and validate the outcomes. This helps create deterministic tests for an otherwise dynamic integration.

- Scenario and Context Variation Testing: Design test scenarios covering a wide range of contexts. For a given functionality, vary the external data conditions. For example, if testing an AI coding assistant using MCP to fetch documentation and code snippets, create scenarios like: “documentation has relevant info,” “documentation is missing that info,” “code repository has an example implementation,” etc. Verify how the AI responds in each case. This ensures the AI+MCP system is robust against different real-world situations.

- Negative and Edge Case Testing: Don’t just test the happy paths. Try scenarios where the context might be incomplete or misleading. For instance, remove a key piece of data from the external source and see if the AI can handle the situation (does it fail gracefully or produce an incorrect guess?). Similarly, feed unusual or extreme data via the context (like a very large document or a strangely formatted entry) to see if the system can handle it without errors or confusion.

- Monitoring and Logs Verification: MCP lets servers send structured log messages to clients, and many MCP hosts and servers also record the requests they handle. QA teams should leverage these logs where available. After running tests, check the logs or audit trails to ensure the right data was fetched and used. For example, if a test expected the AI to retrieve a customer’s order history from an ERP system via MCP, the logs should show that specific query. This not only verifies correctness but also helps diagnose issues when the AI output is not as expected (it could reveal if the context fetch failed or pulled the wrong data).

- Collaboration with Developers and Data Owners: Because MCP spans AI and external data systems, QA should work closely with the development team and possibly the owners of the external systems. Understanding how the MCP integration is configured (what data sources, what access levels, update frequencies, etc.) will help design better tests. For instance, if an AI is connected to a Slack MCP server to fetch company chat context, knowing which channels or data are included will guide relevant test cases.

- Automation of Contextual Tests: Whenever possible, automate the testing of AI responses with varying contexts. This could mean writing automated scripts that set up a certain state in the MCP data source, then query the AI via its API or interface, and verify the output contains or reflects that state. Automation is important because MCP-based systems may need regression testing each time the external data or the model updates. Automated tests can quickly catch if a change in the data source or a new version of the model breaks the context integration logic.
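Several of these practices (a mock server, fixed data snapshots, context variation, edge cases, log checks, and automation) combine naturally into a table-driven test. The sketch below assumes a hypothetical `MockMCPServer` that answers JSON-RPC-style `resources/read` requests from a fixture snapshot and records every request, plus a hypothetical `docs_assistant` standing in for the system under test; neither is an official MCP SDK class, and the `docs://` URIs are made up for illustration.

```python
import json
from dataclasses import dataclass, field


@dataclass
class MockMCPServer:
    """Hypothetical mock: serves resources from a fixed snapshot and records every request."""
    snapshot: dict
    requests: list = field(default_factory=list)

    def handle(self, raw: str) -> dict:
        req = json.loads(raw)
        self.requests.append(req)                 # kept for log verification
        uri = req["params"]["uri"]
        text = self.snapshot.get(uri)
        if text is None:
            return {"jsonrpc": "2.0", "id": req["id"],
                    "error": {"code": -32002, "message": f"Resource not found: {uri}"}}
        return {"jsonrpc": "2.0", "id": req["id"],
                "result": {"contents": [{"uri": uri, "mimeType": "text/plain", "text": text}]}}


def docs_assistant(question: str, server: MockMCPServer) -> str:
    """Hypothetical system under test: look up docs for the question's last word and answer."""
    topic = question.split()[-1].strip("?").lower()
    raw = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "resources/read",
                      "params": {"uri": f"docs://{topic}"}})
    resp = server.handle(raw)
    if "error" in resp:
        return "I couldn't find documentation on that topic."
    text = resp["result"]["contents"][0]["text"]
    return "Docs say: " + text[:200]              # this stub truncates very large documents


SCENARIOS = [
    # (scenario name, fixture snapshot, question, substring the answer must contain)
    ("docs have the answer", {"docs://retries": "Set max_retries in config."},
     "How do I configure retries?", "max_retries"),
    ("docs missing the topic", {}, "How do I configure retries?", "couldn't find"),
    ("very large document", {"docs://retries": "x" * 1_000_000},
     "How do I configure retries?", "Docs say:"),
]

for name, snapshot, question, expected in SCENARIOS:
    server = MockMCPServer(snapshot)              # fresh, deterministic data per scenario
    answer = docs_assistant(question, server)
    assert expected in answer, name
    # Log verification: exactly one read, for the expected URI.
    assert [r["params"]["uri"] for r in server.requests] == ["docs://retries"], name
```

Because each scenario builds its own server from a fixed snapshot, results do not depend on live data, and covering a new context variation means adding one more row to `SCENARIOS`.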

Leveraging Test Collab for MCP Testing Projects

When dealing with complex systems that involve both AI behavior and multiple data integrations, a robust test management approach is essential. Test Collab is one such platform that can greatly assist QA teams in organizing and executing tests for MCP-based systems. Here’s how Test Collab’s features align with the needs of testing MCP integrations:

- Centralized Collaboration: Test Collab provides a single, shared repository for all testing artifacts – test cases, plans, requirements, and even team discussions, all in one hub. For MCP projects, this means testers, developers, and product managers can collaborate closely. For example, when creating a test plan for a new MCP integration, team members can comment on test cases directly in Test Collab (instead of long email threads). You can create collaborative test plans and assign them to groups of testers so they can work together seamlessly. This level of collaboration ensures everyone understands the context scenarios being tested and can contribute their insights (developers can review test cases to ensure all integration points are covered, etc.).

- Test Case Management & Organization: As a modern test case management tool, Test Collab helps you organize test suites in a logical way. For an MCP-based system, you might have test suites for “Context Retrieval”, “AI Response Accuracy”, “Security Permissions”, and so on. Test Collab supports custom fields and tags, allowing QA teams to label tests by context type or data source (for instance, tagging certain tests as “CRM data” or “GitHub integration”). This makes it easy to filter and run specific groups of tests when a particular integration is updated. It also supports linking test cases to requirements or user stories (useful to trace that all aspects of the MCP integration specified by the product are being verified).

- Integration with Development Tools: Testing doesn't happen in isolation. Tools like Test Collab integrate with popular project management and issue tracking systems such as JIRA. In practice, if a test for an MCP feature fails, Test Collab can directly push a bug to JIRA, ensuring developers get all the details. This tight integration saves time and ensures that context-related bugs (which might be complex) are well-documented and tracked. Furthermore, by supporting configurable test plans and scheduling, Test Collab can align with agile workflows common in AI/ML projects, where iterative development is the norm.

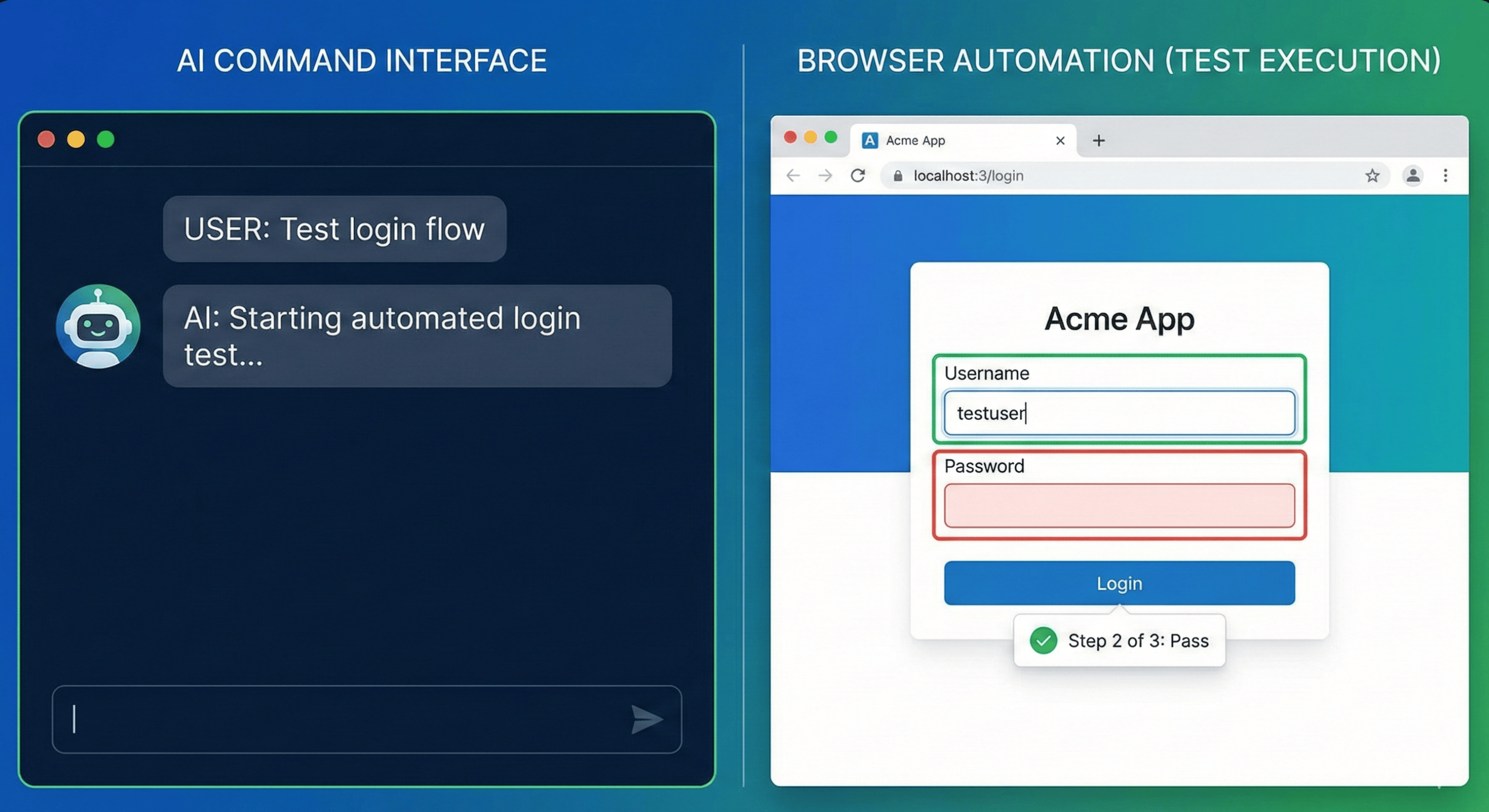

- Native MCP Server Support: Test Collab offers an MCP Server integration that connects AI coding assistants like Claude Code, Cursor, and Codex directly to your test management system. This means your AI assistant can create, query, and update test cases without leaving your IDE, a natural extension of MCP's promise to bridge AI with external tools.

- Automation and AI-Powered Testing: One standout feature of Test Collab is its QA Copilot – an AI-powered assistant for testing. QA Copilot enables no-code test automation, meaning testers can write test cases in plain English and have the tool generate and execute the test scripts automatically. This is particularly useful for MCP systems where you might want to quickly automate a variety of scenarios. For example, a tester could describe: “Verify the AI assistant can pull a file named ‘Budget.xlsx’ from the SharePoint MCP integration and answer a question about 2024 budget total” in plain language. The QA Copilot can then help generate a script to set up the file in SharePoint (or a mock), query the AI via the application’s interface, and validate the response – all without the tester writing code. Such AI-driven automation can dramatically speed up testing cycles and help cover more scenarios. Additionally, automatic browser execution and even self-healing tests (tests that adapt if the UI changes slightly) are features that can benefit testing of AI-driven apps, ensuring that the focus remains on testing the logic (like MCP data usage) rather than fighting with brittle test scripts.

- Collaborative Reporting and Iteration: After test execution, Test Collab provides results and reports that the whole team can review. This is crucial for MCP-based projects where issues might span across the AI and backend systems. Clear reporting helps pinpoint whether a failure was due to the AI logic, the MCP integration, or the external data source. Team members can discuss results within the platform, and because everything is centralized, revisiting a test case’s history (previous runs, changes, comments) is straightforward. This traceability is important for continuous improvement of both the testing process and the product quality.

In essence, Test Collab acts as the glue for QA teams, much like MCP is the glue between AI and data. It facilitates collaboration, keeps test efforts organized, and introduces intelligent automation to handle the complexities of modern AI systems. QA teams testing MCP-based applications can greatly benefit from such a platform to ensure nothing falls through the cracks and that they can keep up with the fast-paced, integrated nature of these projects.

Conclusion

The Model Context Protocol (MCP) represents a significant step forward in how AI/ML models interact with the world of data around them. For QA professionals, it’s not just a buzzword – it’s a technology that will influence how we test AI systems going forward. By understanding MCP’s purpose (a universal, context-bridging protocol) and its impact on development, QA teams can better prepare for the task of validating AI behaviors in rich, data-driven scenarios.

In summary: QA teams should familiarize themselves with MCP’s basics, anticipate changes in their testing workflows to include context and integration checks, and adopt best practices to tackle the new challenges it brings. Leveraging collaboration and test management tools like Test Collab can further empower QA to manage this complexity – coordinating efforts, keeping test cases structured, and even using AI to test AI. With the right knowledge and tools, QA professionals can ensure that MCP-based AI systems deliver reliable, accurate, and secure results – ultimately contributing to high-quality, trustworthy AI-powered applications.