Vibe coding is intoxicating. You describe what you want, and code appears. No syntax lookup, no boilerplate typing, just intent translated into implementation. But there's a problem lurking beneath the surface.

The Hallucination Gap

When AI doesn't have complete information, it does what any confident assistant does: it guesses. And AI is remarkably good at making guesses sound authoritative.

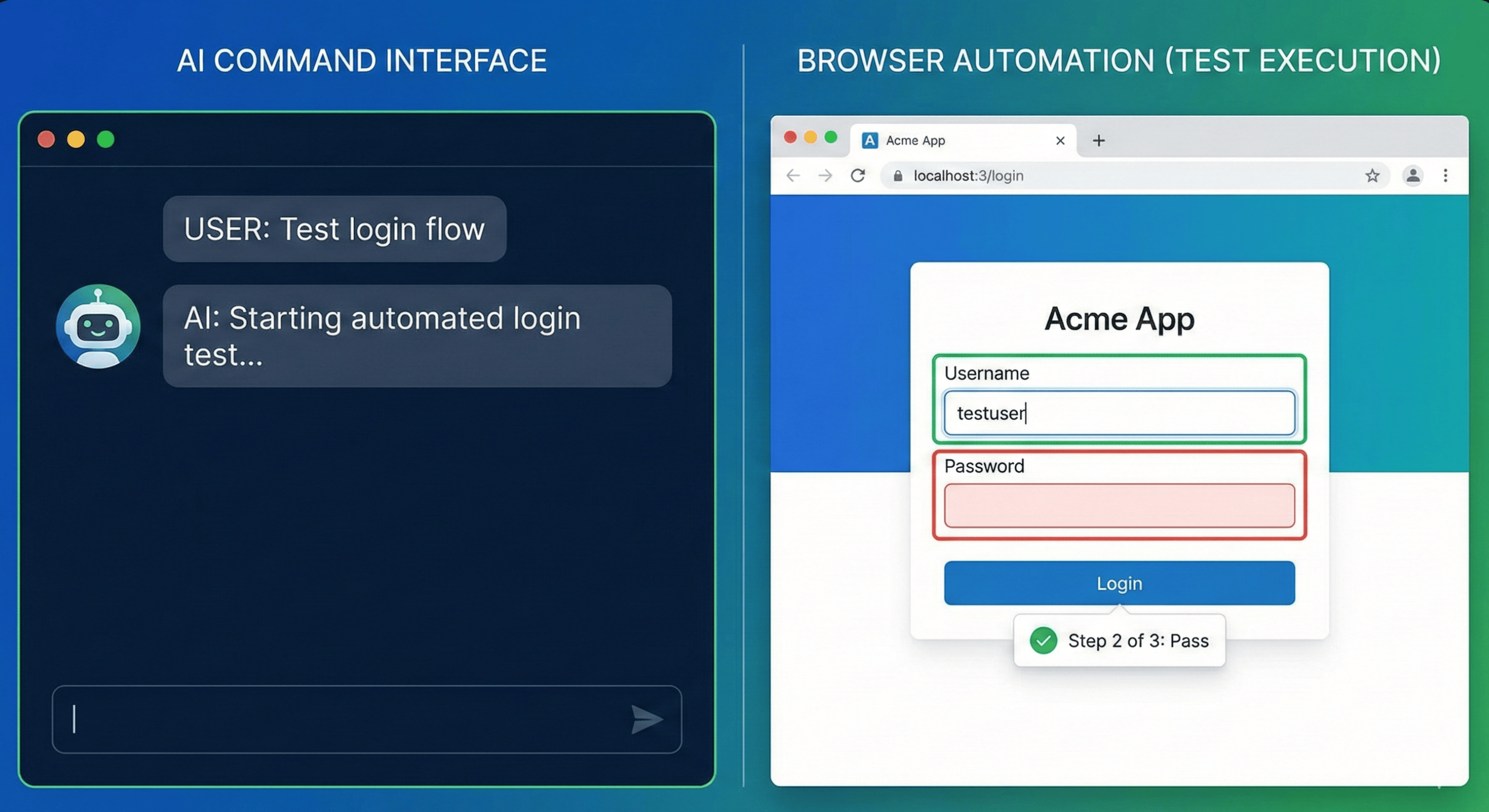

Ask your AI assistant to write tests for your login flow. Without access to your actual test cases, it will invent plausible-sounding scenarios based on generic patterns. It doesn't know that your authentication uses specific edge-case handling for expired tokens, or that you already have comprehensive coverage for password validation. It's working blind.

The same pattern emerges everywhere. Refactoring a component? The AI doesn't know what tests cover it. Adding a feature? It can't check what test documentation already exists. Fixing a bug? It has no visibility into historical test failures.

This is the hallucination gap: the space between what AI confidently produces and what actually matches your project's reality.

Guardrails Through Integration

The fix isn't to abandon vibe coding. It's to give your AI access to your source of truth.

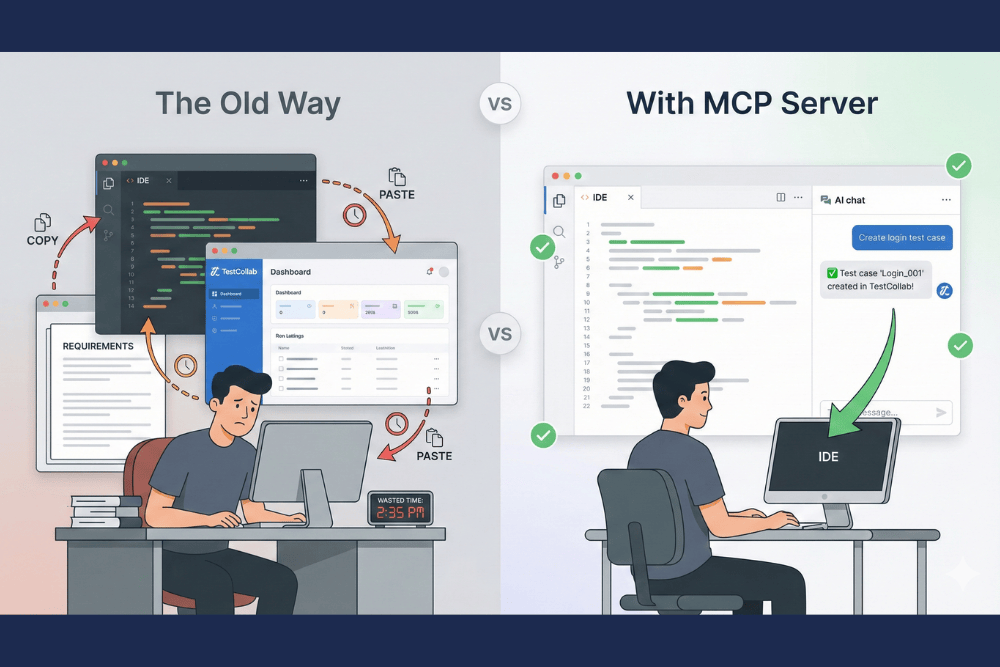

When you connect a test management tool like TestCollab to your AI coding environment via MCP (Model Context Protocol), something changes. Instead of inventing test scenarios, the AI can query your actual test library. Instead of guessing at coverage, it can check what exists. Instead of creating duplicate test cases, it can find and update existing ones.

The AI has far less reason to hallucinate because it has real data to work with.

This is what guardrails look like in practice. Not restrictions that slow you down, but context that keeps the AI grounded. You still get the speed of vibe coding, but the output aligns with your project's actual state.
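To make the "find and update instead of duplicate" idea concrete, here is a minimal sketch in Python. It uses a plain in-memory dictionary as a stand-in for a test library; the `CaseRecord` class and the `upsert_test_case` helper are hypothetical names for illustration and are not part of TestCollab or MCP.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    """Hypothetical stand-in for a test case stored in a test library."""
    id: int
    title: str
    steps: list[str]


def normalize(title: str) -> str:
    """Lowercase and collapse whitespace so near-identical titles match."""
    return " ".join(title.lower().split())


def upsert_test_case(library: dict[str, CaseRecord], title: str,
                     steps: list[str]) -> tuple[CaseRecord, bool]:
    """Update the existing case with this title, or create one if none exists.

    Returns the case and a flag that is True only when a new case was created.
    """
    key = normalize(title)
    existing = library.get(key)
    if existing is not None:
        existing.steps = steps  # update in place instead of duplicating
        return existing, False
    case = CaseRecord(id=len(library) + 1, title=title, steps=steps)
    library[key] = case
    return case, True


library: dict[str, CaseRecord] = {}
first, created_first = upsert_test_case(
    library, "Login with expired token",
    ["Submit expired token", "Expect 401"],
)
second, created_second = upsert_test_case(
    library, "login with  expired token",
    ["Submit expired token", "Expect 401 and redirect to login"],
)
print(created_first, created_second, len(library))
```

The second call matches the existing case and updates its steps rather than adding a near-duplicate. An assistant without access to the library has no way to perform that lookup, which is why it tends to create new cases by default.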

How It Works

MCP creates a standardized way for AI assistants to connect with external tools. The TestCollab MCP Server exposes your test case library directly to Claude Code, Cursor, Windsurf, and other MCP-compatible clients.
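As a rough illustration of what that standardization looks like on the wire: MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with the `tools/call` method. The sketch below builds such a request in Python. The tool name `list_test_cases` and its arguments are assumptions made up for this example, not the actual tool names exposed by the TestCollab MCP Server; a real client discovers the available tools from the server.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so responses can be matched to them.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call("list_test_cases", {"project_id": 42, "query": "login"})
print(json.dumps(request, indent=2))
```

In practice you rarely write this by hand. Clients such as Claude Code or Cursor construct these requests themselves once the server is configured, so the sketch only shows the shape of the exchange.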

When you're implementing a feature and ask for test cases, the AI queries your actual project. When you refactor code and want to update affected tests, it finds the real ones. When you're unsure about coverage, it gives you facts instead of fabrications.

The conversation changes from the AI imagining your testing landscape to the AI navigating your actual testing landscape.

Beyond Test Management

This pattern extends to any source of truth in your development workflow. Requirements in Jira. Documentation in Confluence. API specs in your repo. The more context your AI has access to, the less it needs to guess.

But test management is particularly high-leverage. Tests are the executable specification of your software. When AI has visibility into what's tested, what's passing, and what's covered, its suggestions become dramatically more useful.

The New Vibe Coding

Vibe coding with guardrails isn't slower than vibe coding without them. The AI still responds instantly. You still describe what you want in natural language. The difference is that what comes back is grounded in reality rather than plausible fiction.

If you're already using an AI coding assistant, connecting it to your test management system is one of the highest-impact integrations you can make. Setup takes a few minutes. The payoff is that every future interaction starts from your real test data instead of a guess.

Check out the TestCollab MCP Server to get started, or visit our GitHub repository for setup instructions.

Stop letting your AI guess. Give it the context it needs.