"We tested it" means nothing without proof.

Every QA team has faced that moment: a bug resurfaces in production, an auditor asks for documentation, or a stakeholder questions whether a feature was actually validated. Without proper test evidence, you're left scrambling through Slack threads, email attachments, and shared drives hoping to find that one screenshot from three sprints ago.

A scalable test evidence strategy solves this. It ensures consistent collection, centralized storage, and instant retrieval - whether you're debugging a regression or preparing for a compliance audit.

What counts as test evidence

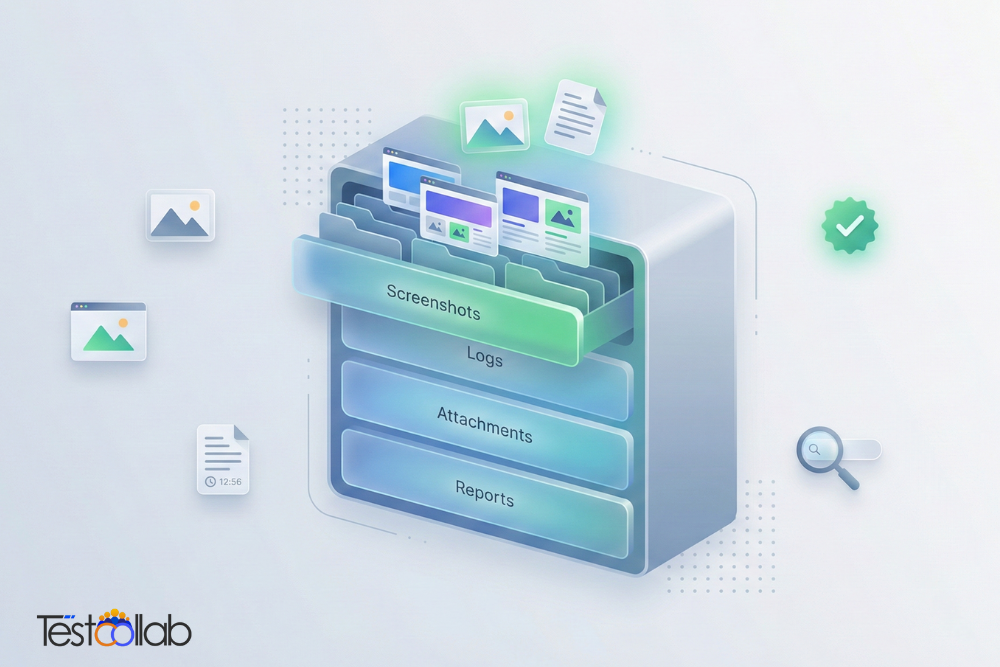

Test evidence is any artifact that proves testing occurred and documents the outcome. The five essential types are:

Screenshots and recordings. Visual proof of UI states, error messages, and failures. Critical for front-end testing and reproducing bugs.

Execution logs. Step-by-step records showing what passed, what failed, and why. These provide the technical detail developers need.

Attachments. Supporting files like test data, configuration snapshots, API responses, or exported reports.

Execution metadata. Who ran the test, when, on what environment, and using which configuration. Context that's often forgotten but always needed later.

Defect links. Direct connections between failed tests and bug tickets. This closes the loop between finding issues and fixing them.

Without all five, your evidence tells an incomplete story.
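One way to make the five types concrete is to keep a single record per test execution. The sketch below is a hypothetical Python data structure, not any tool's schema, and the class and field names are illustrative assumptions. It also assumes that defect links are required only for failed runs, which is one reading of "closes the loop" rather than something stated above.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class EvidenceRecord:
    """Hypothetical per-execution record covering the five evidence types."""
    test_id: str
    status: str  # "passed" or "failed"
    screenshots: list[str] = field(default_factory=list)   # 1. screenshots/recordings
    log: list[str] = field(default_factory=list)           # 2. execution log lines
    attachments: list[str] = field(default_factory=list)   # 3. supporting files
    executed_by: Optional[str] = None                      # 4. execution metadata
    executed_at: Optional[datetime] = None
    environment: Optional[str] = None
    defect_links: list[str] = field(default_factory=list)  # 5. linked bug tickets

    def missing_parts(self) -> list[str]:
        """Name each evidence type this record is still missing."""
        missing = []
        if not self.screenshots:
            missing.append("screenshots")
        if not self.log:
            missing.append("log")
        if not self.attachments:
            missing.append("attachments")
        if not (self.executed_by and self.executed_at and self.environment):
            missing.append("metadata")
        # Assumption: a defect link is only expected when the test failed.
        if self.status == "failed" and not self.defect_links:
            missing.append("defect_links")
        return missing


record = EvidenceRecord(
    test_id="TC-101",
    status="failed",
    screenshots=["checkout-error.png"],
    log=["step 3: expected HTTP 200, got 500"],
    executed_by="qa.alice",
    executed_at=datetime(2024, 5, 2, 14, 30),
    environment="staging",
)
print(record.missing_parts())  # ['attachments', 'defect_links']
```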

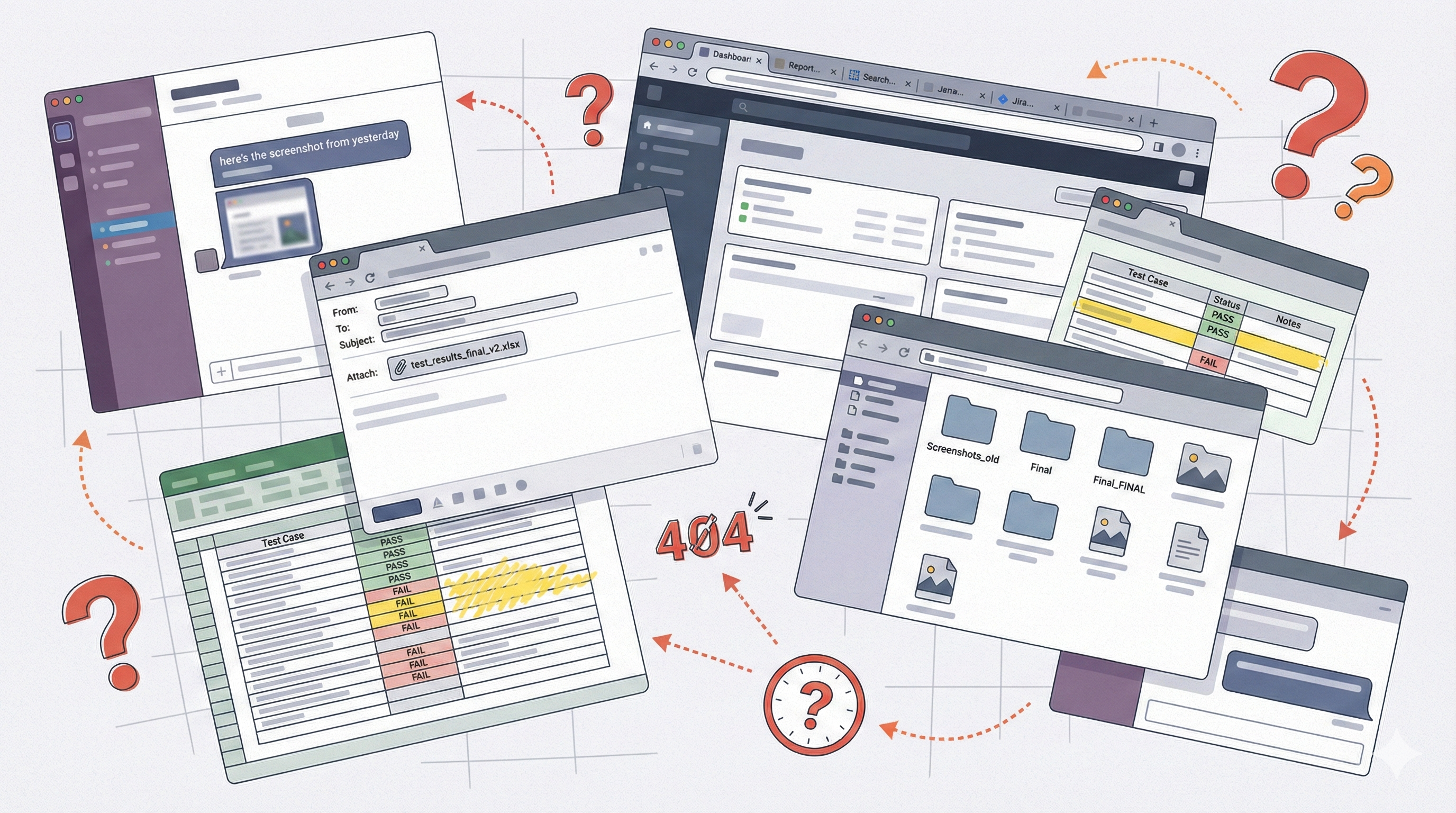

Why evidence collection breaks down

Most teams start with good intentions. They capture screenshots for the first few test cycles, attach logs when something fails, and document results somewhere. Then deadlines hit.

Manual capture is inconsistent. Some testers attach everything. Others mark tests as passed with no supporting evidence. There's no standard, so quality varies wildly.

Evidence scatters across tools. Screenshots live in Slack. Logs get emailed. Results sit in spreadsheets. Attachments end up in shared drives with folder names like "Final_v2_FINAL". Finding anything requires archaeology.

No standard format exists. Even when evidence is captured, comparing across test runs becomes impossible. What was the environment last time? Who executed it? Good luck finding out.

Audits trigger panic. When compliance asks for proof of testing, teams spend days reconstructing what happened. Evidence that should take minutes to produce takes far longer - if it can be found at all.

Building a strategy that scales

A scalable evidence strategy isn't about capturing more - it's about capturing consistently and storing centrally.

Standardize evidence requirements by test type. Define what each test category needs. Smoke tests might require only pass/fail status. UAT tests need screenshots of every critical screen. Regression tests need logs and environment details. Document these requirements and enforce them.
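Writing these requirements down as data, rather than only in a wiki page, makes them enforceable by tooling. A minimal Python sketch, assuming a hypothetical `EVIDENCE_POLICY` table that mirrors the examples above; it only checks that each evidence type is present, not that every critical UAT screen was captured.

```python
# Hypothetical policy mirroring the examples in the text.
EVIDENCE_POLICY: dict[str, frozenset[str]] = {
    "smoke": frozenset({"status"}),
    "uat": frozenset({"status", "screenshots"}),
    "regression": frozenset({"status", "log", "environment"}),
}


def missing_evidence(test_type: str, provided: set[str]) -> set[str]:
    """Return the required evidence types that `provided` lacks."""
    if test_type not in EVIDENCE_POLICY:
        # Refuse to guess: every test type needs an explicit policy.
        raise ValueError(f"no evidence policy for test type {test_type!r}")
    return set(EVIDENCE_POLICY[test_type] - provided)


print(missing_evidence("regression", {"status", "log"}))  # {'environment'}
print(missing_evidence("smoke", {"status"}))              # set()
```

Raising on an unknown test type is a deliberate choice: a category with no documented policy is itself a gap worth surfacing.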

Use custom execution statuses to flag incomplete evidence. A status like "Needs Evidence Review" prevents tests from being marked complete until artifacts are attached.
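The gating rule behind such a status can be sketched as a small resolver. This is an illustrative Python sketch, not how any particular test management tool implements it; the `ExecutionStatus` enum and `resolve_status` function are hypothetical names.

```python
from enum import Enum


class ExecutionStatus(Enum):
    PASSED = "Passed"
    FAILED = "Failed"
    NEEDS_EVIDENCE_REVIEW = "Needs Evidence Review"


def resolve_status(outcome: ExecutionStatus,
                   required: set[str],
                   attached: set[str]) -> ExecutionStatus:
    """Hold any result in review until every required artifact is attached."""
    if required - attached:
        return ExecutionStatus.NEEDS_EVIDENCE_REVIEW
    return outcome


print(resolve_status(ExecutionStatus.PASSED, {"screenshots"}, set()).value)
# Needs Evidence Review
print(resolve_status(ExecutionStatus.PASSED, {"screenshots"}, {"screenshots", "log"}).value)
# Passed
```

Failed results are held too, on the assumption that a failure without evidence is as hard to act on as a pass without it.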

Automate where possible. Manual evidence capture doesn't scale. Integrate your automation framework to capture screenshots on failure automatically. Use APIs to push execution results with artifacts attached. The less manual work required, the more consistent your evidence becomes.

Centralize in one system. Evidence scattered across five tools is evidence you can't find. Store everything in your test management platform: screenshots attached to test steps, logs linked to executions, defects connected to failures. One source of truth.

Link evidence to requirements. Traceability transforms evidence from "proof we tested something" to "proof we tested this specific requirement". When auditors ask about coverage, you can show exactly which tests validated each requirement and what the results were.

A proper requirements traceability matrix makes this automatic - every test links to requirements, and coverage reports show what's tested and what passed.
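At its core, a traceability matrix maps each requirement to the tests that cover it and their latest results. A minimal Python sketch, assuming test-to-requirement links and results are already available as plain data; the IDs and function names are hypothetical.

```python
from collections import defaultdict


def build_traceability_matrix(links, results):
    """links: iterable of (test_id, requirement_id) pairs.
    results: dict of test_id -> latest status.
    Returns requirement_id -> {test_id: status}; unexecuted tests show "not run"."""
    matrix = defaultdict(dict)
    for test_id, req_id in links:
        matrix[req_id][test_id] = results.get(test_id, "not run")
    return dict(matrix)


def coverage_summary(matrix, all_requirements):
    """List requirements with no linked tests, and those whose tests all passed."""
    untested = sorted(set(all_requirements) - set(matrix))
    fully_passed = sorted(
        req for req, tests in matrix.items()
        if all(status == "passed" for status in tests.values())
    )
    return {"untested": untested, "fully_passed": fully_passed}


links = [("TC-1", "REQ-1"), ("TC-2", "REQ-1"), ("TC-3", "REQ-2")]
results = {"TC-1": "passed", "TC-2": "passed", "TC-3": "failed"}
matrix = build_traceability_matrix(links, results)
print(coverage_summary(matrix, ["REQ-1", "REQ-2", "REQ-3"]))
# {'untested': ['REQ-3'], 'fully_passed': ['REQ-1']}
```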

Measuring evidence quality

You can't improve what you don't measure. Track these metrics:

Evidence coverage. What percentage of test executions have the required artifacts attached? If only 60% of your tests have screenshots when your policy requires them, you have a process problem.

Time to evidence. How long after execution is evidence attached? Evidence captured during testing is accurate. Evidence reconstructed a week later is unreliable.

Audit readiness. Can you pull complete evidence for any requirement in under five minutes? If not, your retrieval process needs work.

Review these metrics monthly. They'll reveal whether your strategy is working or just documented.
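The first two metrics are simple to compute once executions are recorded with their required and attached evidence. A minimal Python sketch, assuming each execution is a dict with hypothetical `required`, `attached`, `executed_at`, and `evidence_at` keys; the sample timestamps are illustrative only. Audit readiness is better measured by timing an actual retrieval drill than by a formula.

```python
from datetime import datetime, timedelta
from statistics import median


def evidence_coverage(executions):
    """Percentage of executions with every required artifact attached."""
    if not executions:
        return 0.0
    complete = sum(1 for e in executions if e["required"] <= e["attached"])
    return 100.0 * complete / len(executions)


def median_time_to_evidence(executions):
    """Median delay between execution and evidence being attached."""
    delays = [e["evidence_at"] - e["executed_at"]
              for e in executions if e.get("evidence_at")]
    return median(delays) if delays else None


t0 = datetime(2024, 5, 1, 9, 0)
executions = [
    {"required": {"screenshots"}, "attached": {"screenshots", "log"},
     "executed_at": t0, "evidence_at": t0 + timedelta(minutes=5)},
    {"required": {"screenshots", "log"}, "attached": {"log"},
     "executed_at": t0, "evidence_at": t0 + timedelta(days=2)},
    {"required": {"log"}, "attached": {"log"},
     "executed_at": t0, "evidence_at": t0 + timedelta(minutes=30)},
]
print(round(evidence_coverage(executions), 1))  # 66.7
print(median_time_to_evidence(executions))      # 0:30:00
```

The median is used rather than the mean so that one run documented days late does not mask an otherwise healthy process (or vice versa).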

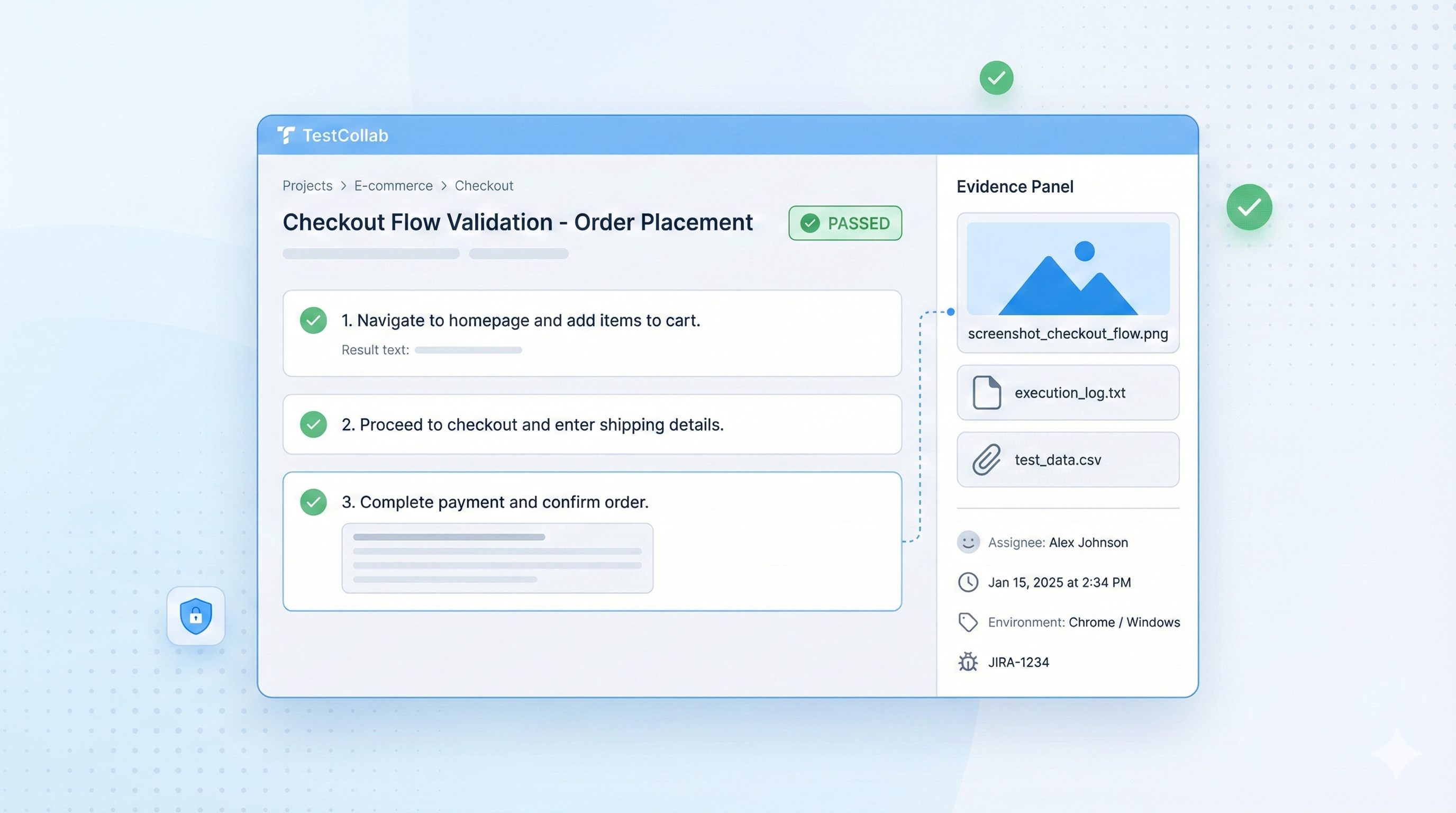

How TestCollab makes this effortless

TestCollab is built for teams who take test evidence seriously.

Attach evidence during execution. Screenshots, logs, and files attach directly to test steps - not in a separate system, but right where the testing happens. Every artifact is linked to the specific step it documents.

Custom execution statuses. Create statuses like "Needs Evidence Review" or "Pending Attachment" to enforce evidence requirements before tests can be marked complete. Your workflow, your rules.

Requirements traceability. Every test links to requirements. Coverage reports show what's tested, what passed, and when, without manual assembly. Pull audit-ready documentation in seconds.

Jira integration with automatic screenshots. When you log a defect, screenshots upload automatically. No copying files between systems, no broken links.

Audit-ready exports. Generate PDF and Excel reports with full execution history, attachments, and traceability. What used to take days now takes one click.

Stop losing evidence. Start your free trial and see how TestCollab keeps everything in one place.