AI has solved the blank page problem.

Cursor, Claude, and Copilot can scaffold an entire feature in seconds. The friction of writing code is disappearing. And if you've felt a mix of excitement and unease watching this happen, you're not alone.

Here's what nobody is saying out loud: we've made creation cheap, but we haven't made correctness any easier.

In the old world, code was scarce. We wrote it line by line, debated architecture in pull requests, and treasured what we shipped. Testing happened at the end, as a sanity check before release.

In the new world, code is abundant. AI generates it faster than any team can review. The bottleneck has shifted. The question is no longer "can we build it?" but "did we build the right thing?"

How do you know the AI didn't introduce a subtle security flaw?

How do you verify that generated features actually match user needs?

Who checks the work when the work takes seconds?

When the cost of generating code drops to zero, the value of verifying it goes to infinity.

This changes what testing is.

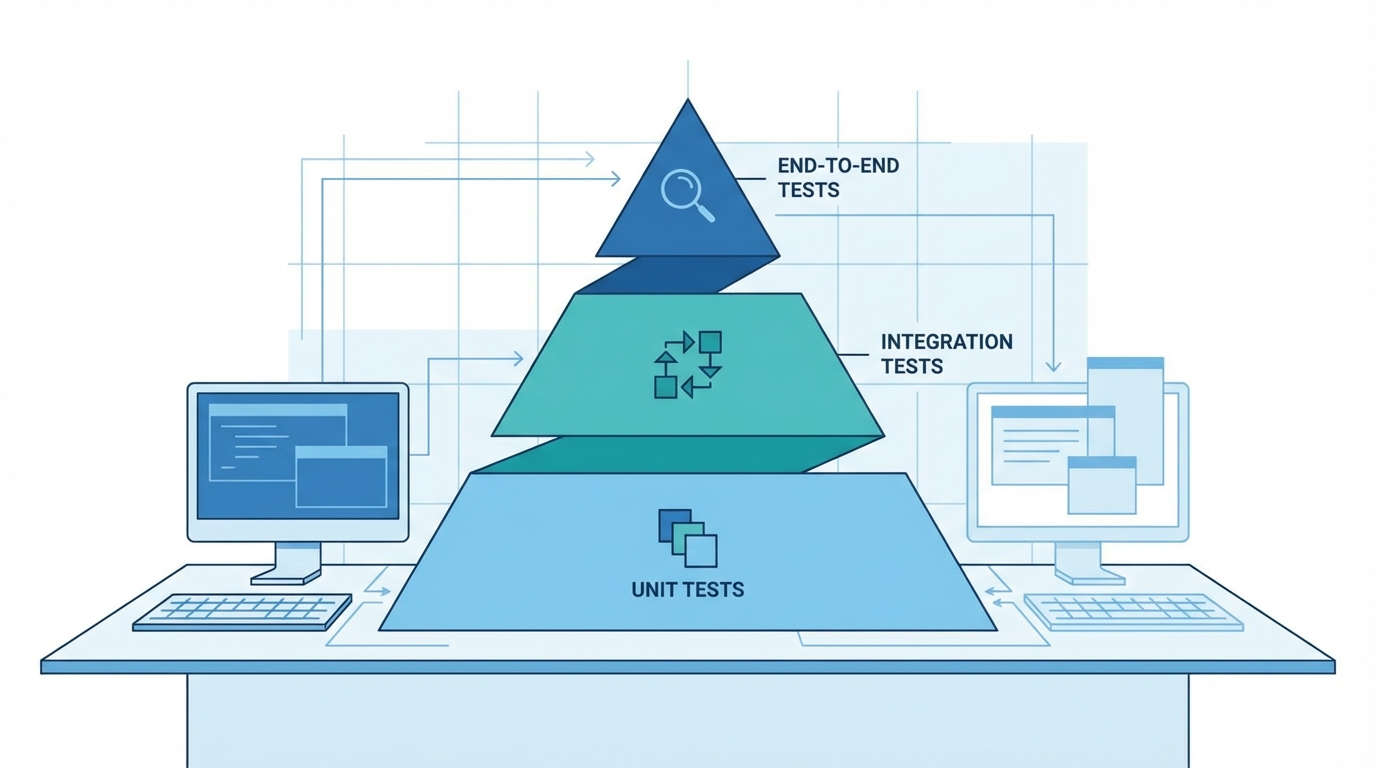

Testing is no longer about catching bugs at the end. It's about defining specifications at the start. If you can't write a test for it, the AI shouldn't build it.
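Here is a rough sketch of what "specification first" can look like in practice. The discount rules and the `apply_discount` function are hypothetical, invented purely for illustration. The point is the order: the tests state what correct means, and the implementation (the part an AI assistant might generate) comes second and has to satisfy them.

```python
# Hypothetical example: the function name and the business rules are
# assumptions made up for this sketch, not taken from any real product.

# 1. The specification, written before any implementation exists.
def test_no_discount_leaves_price_unchanged():
    assert apply_discount(1000, 0) == 1000


def test_full_discount_is_free():
    assert apply_discount(1000, 100) == 0


def test_discount_never_increases_price():
    for percent in range(0, 101):
        assert 0 <= apply_discount(999, percent) <= 999


def test_invalid_percent_is_rejected():
    for bad in (-1, 101):
        try:
            apply_discount(1000, bad)
        except ValueError:
            continue
        raise AssertionError(f"percent={bad} should be rejected")


# 2. A candidate implementation, written (or generated) afterwards.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price in cents after applying a percentage discount."""
    if price_cents < 0:
        raise ValueError("price_cents must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Integer arithmetic avoids floating-point rounding;
    # the discount amount rounds down to a whole cent.
    return price_cents - (price_cents * percent) // 100


def run_spec() -> int:
    """Run every spec check and return how many passed."""
    checks = [
        test_no_discount_leaves_price_unchanged,
        test_full_discount_is_free,
        test_discount_never_increases_price,
        test_invalid_percent_is_rejected,
    ]
    for check in checks:
        check()  # raises AssertionError if the implementation violates the spec
    return len(checks)


if __name__ == "__main__":
    print(f"{run_spec()} spec checks passed")
```

If the generated implementation changes tomorrow, the four checks stay the same. That is the sense in which the tests, not the code, carry the definition of "right".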

The teams that win in this new era won't be the ones who prompt fastest. They'll be the ones who can clearly articulate what correct looks like, and prove that their systems meet that bar.

That's what we're building at Test Collab: not just a test management tool, but a place where teams collaborate to define the truth their software must satisfy.

The AI writes the code. You define what "right" means.